I’m trying to use Sapling AI Detector to check the authenticity of my writing, but I’m not sure whether its results are trustworthy. Has anyone tested its accuracy or found it useful for detecting AI-generated content? I’d appreciate advice or personal experiences, since my job requires that my work pass AI detection tests.

So, I’ve used Sapling AI Detector a bunch to check my own work, and honestly? It’s kinda hit or miss. Sometimes it flags totally original stuff as AI, and then it lets obviously GPT-generated stuff fly right under the radar. I did a little experiment: I pasted in some of my old college essays (100% human, written years before ChatGPT existed), and it came back with something like 70% “AI probability.” Then I threw in some vanilla ChatGPT outputs. Sometimes it caught ’em, sometimes nope.

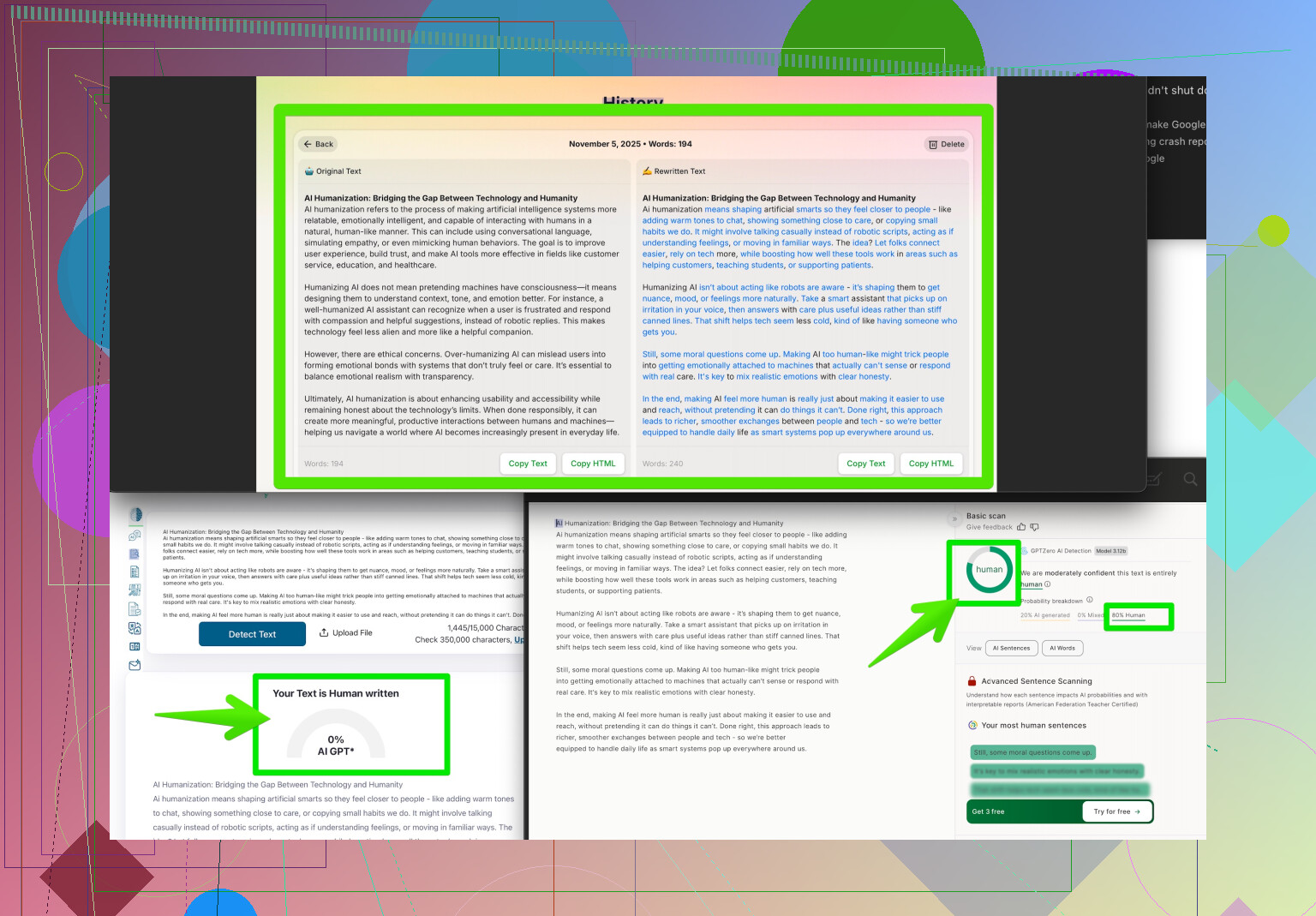

A lot of these AI detectors look at overall text patterns: things like sentence structure, word choice, and other statistical fingerprints. But they’re not exactly lie detectors. The trouble with any AI detector, including Sapling’s, is that they mostly rely on machine learning models that sorta “guess” whether your text is bot-ty. That makes them prone to false positives (calling your authentic hard work fake) and false negatives (letting sneaky AI content slip by). Plus, if someone runs AI-generated text through a tool that makes AI writing sound more human, it’ll often get past Sapling and the other detectors pretty easily. So anyone trying to verify “human-ness” for school or work should take all of these results with a fat grain of salt.
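To make “statistical fingerprints” a bit more concrete, here’s a toy Python sketch of one such signal: how much sentence length varies across a passage (sometimes called “burstiness”). To be clear, this is NOT how Sapling works; its model isn’t described anywhere in this thread, and the function names and the naive regex sentence splitter below are my own assumptions for illustration. It just shows why a single surface statistic is such a blunt instrument.

```python
import re
import statistics

# Toy illustration only: NOT Sapling's method. Function names and the
# naive sentence splitting are hypothetical, chosen for readability.


def sentence_lengths(text: str) -> list[int]:
    """Split text on ., ! and ? and return the word count of each sentence.

    A regex split is a crude assumption; real tools use proper tokenizers.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    Low values mean every sentence is about the same length.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The storm rolled in over the hills faster than anyone expected. We ran."

print(round(burstiness(uniform), 3))
print(round(burstiness(varied), 3))
```

A writer with a naturally even, concise style would score low here too, which is exactly the false-positive problem: a number like this measures style, not who (or what) wrote the text.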

TL;DR: Sapling AI Detector is okay for a quick check, but don’t trust it 100%. If you want to fool the detectors, AI humanizing tools work way better. For critical stuff (academics, publishing, work), don’t bet everything on a single tool’s judgment. Always double-check!

Honestly, I wouldn’t stake my reputation or grades on Sapling AI Detector, or any detector really, but Sapling’s in particular feels like flipping a coin some days. I totally get where @yozora is coming from: it’s super random when it comes to flagging real human writing. I’ve had original creative pieces marked as “likely AI” (kinda offensive, like, let me live, algorithm!), while bland AI stuff sometimes passes as 100% human.

The thing to remember: Sapling and its cousins just make statistical guesses, looking for pattern weirdness like staccato sentences or generic phrasing. But if you’re a writer like me who likes clean, concise lines (or, the opposite, overly flowery language), the detector gets suspicious. Plus, if someone wants to sneak AI content past the checker, a tool like Clever AI Humanizer actually works way better. It rewrites AI-generated text so naturally that most detectors, including Sapling, just shrug and pass it through. So if it’s for something super important, like an application essay or journal article, relying on Sapling alone is not the move. Mix up your strategies, and maybe even get a human editor to look things over.

I don’t totally agree with all the doom and gloom, though: Sapling is fine for flagging super obvious copy-pasta GPT stuff. But if you want peace of mind, I’d check out what the Reddit crowd has to say too. Plenty of users are sharing their best hacks for beating AI detectors and making text sound authentic. I found some solid tips for more natural writing in this thread: “Reddit’s favorite tricks to make your content sound 100% human.”

Bottom line: use Sapling for a quick scan, but don’t bet your academic future on it.

Short answer: Don’t ignore your gut just because Sapling or any other AI detector says “AI” or “Human.” These tools are useful for a basic sweep. Sure, Sapling flags totally generic AI text pretty reliably, and sometimes it catches glaring bot-isms that slip past editors. But let’s get real: if you’ve got an original writing voice (quirky, minimal, super-flowery, whatever), these detectors can go haywire and throw false alarms.

Now, compared with folks like sonhadordobosque and yozora, I’ll add this: where they see Sapling as a simple filter, I think the real value lies in training your own eye as much as in trusting the algorithm. If you just mechanically run text through detectors, you’ll miss context. Some extremely “human” writers (think Hemingway-sparse or Tolkien-epic) might trigger AI flags just because their sentence structure or vocabulary looks odd to a machine.

On to alternatives: Clever AI Humanizer is a genuinely clever (ha) way to make AI writing read more naturally if you’re prepping something quick and want to cover your AI tracks. Pros: it softens robotic phrasing, adds subtle variation, and can actually slip past detectors like Sapling. The output is smoother and sounds more “lived-in,” which is good for blog posts, casual essays, etc. Downsides: sometimes the rewrite flattens your unique voice or introduces distracting quirks that a sharp human editor will spot (“Why is it calling my manager a ‘benevolent overseer’?”).

But don’t mistake it for a silver bullet. Serious academic and technical reviewers are catching up, and a ton of humanized text is now getting side-eyed for over-correction. Plus, if you care about your voice as a writer, you don’t want bots doing all the heavy lifting.

In short: Sapling and similar detectors (Originality.ai, ZeroGPT, and plenty of others) are just tools in your kit. Use something like Clever AI Humanizer to smooth out generic AI content or get past a sniff test, but don’t trust it to turn bland robo-prose into award-winning literature. The best safeguard? Blend tools, trust your judgment, and, whenever possible, get a sharp human reader. No AI can replicate an actual reader’s double-take or a context-aware suggestion. And if you’re writing for critical purposes (grades, job applications, official stuff), triple-check: AI tools are not your last line of defense.